Metrics

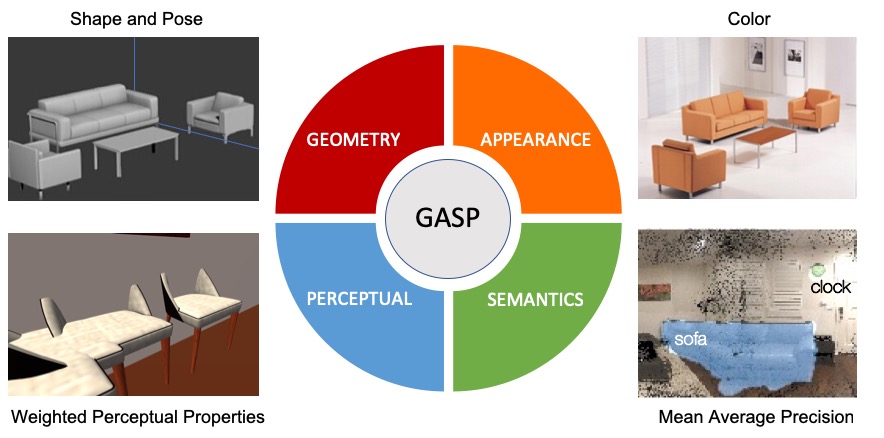

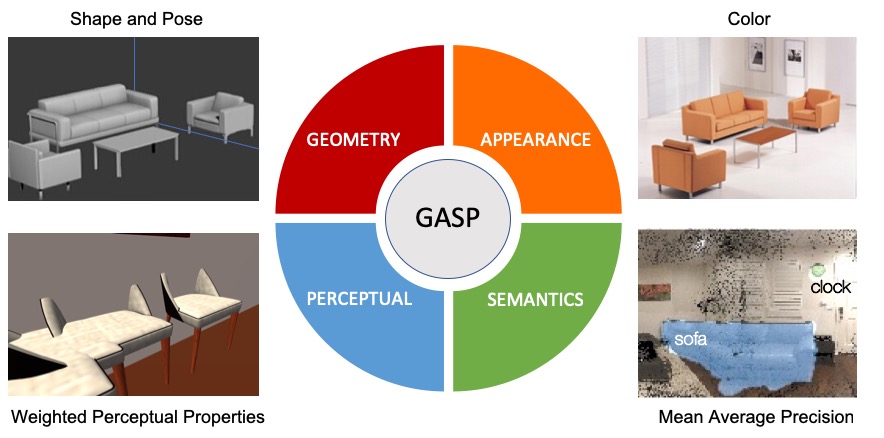

Evaluation of a 3D scene focuses on four key aspects: Geometry, Appearance, Semantic, and Perceptual (GASP).

Details of the metrics for each track are provided in the SUMO white paper.

The SUMO Challenge targets the development of algorithms for comprehensive understanding of 3D indoor scenes from 360° RGB-D panoramas. The target 3D models of indoor scenes include all visible layout elements and objects, complete with pose, semantic information, and texture. Submitted algorithms are evaluated at three levels of complexity, corresponding to the three tracks of the challenge: oriented 3D bounding boxes, oriented 3D voxel grids, and oriented 3D meshes. SUMO Challenge results will be presented at the 2019 SUMO Challenge Workshop at CVPR.

April 10: The organizers of the SUMO Challenge have received information which has led us to choose to cease distribution of the SUMO Challenge data. We are not able to give further details at this moment. In light of this information, we have chosen to postpone the SUMO Challenge at this time. We are hopeful that the challenge can resume once this issue is addressed.

The good news is that the 2019 SUMO Workshop will go on as planned. We have a great lineup of speakers, and we encourage you to submit papers related to 3D scene understanding and computer vision using 360 degree imagery. For more information, visit the workshop web site.

The SUMO challenge dataset is derived from processing scenes from the SUNCG dataset to produce 360° RGB-D images represented as cubemaps and corresponding 3D mesh models of all visible scene elements. The mesh models are further processed into a bounding box and voxel-based representation. The dataset format is described in detail in the SUMO white paper.

- 1024 × 1024 RGB images
- 1024 × 1024 depth maps
- 2D semantic information
- 3D semantic information
- 3D object pose
- 3D element texture
- 3D bounding box scene representation
- 3D voxel grid scene representation
- 3D mesh scene representation
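To illustrate how a bounding box and a voxel representation can be derived from mesh geometry, here is a minimal sketch in pure Python. It is not the processing used to build the SUMO dataset: it assumes an axis-aligned box (the challenge uses oriented boxes), marks only the voxels that contain a mesh vertex (a real voxelizer would sample the triangle surfaces), and the function names and sample vertices are hypothetical.

```python
from math import floor


def bounding_box(vertices):
    """Return (min_corner, max_corner) of an axis-aligned box around the vertices."""
    xs, ys, zs = zip(*vertices)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def voxelize(vertices, voxel_size):
    """Return the set of integer voxel indices that contain at least one vertex.

    Indices are measured from the minimum corner of the bounding box.
    """
    min_corner, _ = bounding_box(vertices)
    occupied = set()
    for vertex in vertices:
        index = tuple(
            int(floor((coord - origin) / voxel_size))
            for coord, origin in zip(vertex, min_corner)
        )
        occupied.add(index)
    return occupied


# Hypothetical sample: four vertices of a small element, in meters.
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 2.0, 0.0), (0.0, 2.0, 0.5)]
box_min, box_max = bounding_box(vertices)
voxels = voxelize(vertices, voxel_size=0.5)
```

The actual dataset format, including how orientation is stored, is described in the SUMO white paper.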

The SUMO Challenge is organized into three performance tracks based on the output representation of the scene. A scene is represented as a collection of elements, each of which models one object in the scene (e.g., a wall, the floor, or a chair). An element is represented in one of three increasingly descriptive representations: bounding box, voxel grid, or surface mesh. For each element in the scene, a submission contains the following outputs listed per track. To get started, download the toolbox and training data from the links below. Visit the SUMO360 API web site for documentation, example code, and additional help.

Bounding box track:

- 3D Bounding Box
- 3D Object Pose
- Semantic Category of Element

Voxel grid track:

- 3D Bounding Box
- 3D Object Pose
- Semantic Category of Element
- Location and RGB Color of Occupied 3D Voxels

Mesh track:

- 3D Bounding Box
- 3D Object Pose
- Semantic Category of Element
- Element's textured mesh (in .glb format)
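The per-track outputs above nest: each track adds one more field on top of the previous one. A minimal sketch of that structure as a Python data class follows. The class names, field names, and the rotation-plus-translation pose layout are assumptions for illustration only; they are not the SUMO360 API, which has its own documentation.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]
VoxelIndex = Tuple[int, int, int]
RGB = Tuple[int, int, int]


@dataclass
class Pose:
    """Hypothetical pose layout: a 3x3 rotation (row-major) and a translation."""
    rotation: Tuple[Vec3, Vec3, Vec3]
    translation: Vec3


@dataclass
class Element:
    """One scene element (e.g., a wall, the floor, or a chair)."""
    category: str                                   # semantic category
    box_min: Vec3                                   # bounding box corners
    box_max: Vec3
    pose: Pose
    voxels: Optional[Dict[VoxelIndex, RGB]] = None  # voxel track: occupied voxel -> color
    mesh_path: Optional[str] = None                 # mesh track: path to a .glb file

    def track(self) -> str:
        """Name the most descriptive track this element has data for."""
        if self.mesh_path is not None:
            return "mesh"
        if self.voxels is not None:
            return "voxel grid"
        return "bounding box"


IDENTITY = Pose(rotation=((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)),
                translation=(0.0, 0.0, 0.0))

chair_box = Element("chair", (0.0, 0.0, 0.0), (0.5, 1.0, 0.5), IDENTITY)
chair_voxels = Element("chair", (0.0, 0.0, 0.0), (0.5, 1.0, 0.5), IDENTITY,
                       voxels={(0, 0, 0): (120, 80, 40)})
chair_mesh = Element("chair", (0.0, 0.0, 0.0), (0.5, 1.0, 0.5), IDENTITY,
                     mesh_path="chair.glb")
```

Optional fields keep one type usable across all three tracks, which mirrors how the representations are described as increasingly descriptive.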

Submit your SUMO Challenge results with just a few easy steps.

Format: { "result": "[some-public-url]/[filename].zip" }

Example: { "result": "https://foobar.edu/sumo-submission.zip" }
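The submission payload shown above is a small JSON object with a single "result" key holding the public URL of a zip file. A minimal sketch of producing it in Python follows. The function name is hypothetical, the .zip check is an assumption drawn from the template, and this page does not state where the JSON is sent, so the sketch only builds the text.

```python
import json


def make_submission_payload(zip_url: str) -> str:
    """Build the JSON text that points at a publicly hosted results archive."""
    # Assumption: the template ends in .zip, so reject anything else early.
    if not zip_url.lower().endswith(".zip"):
        raise ValueError("submission URL should point to a .zip file")
    return json.dumps({"result": zip_url})


payload = make_submission_payload("https://foobar.edu/sumo-submission.zip")
print(payload)
```

Building the payload with `json.dumps` rather than by string concatenation keeps the quoting correct if the URL contains special characters.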

Winners of the 2019 SUMO Challenge will be announced at the CVPR SUMO Challenge Workshop, which will be held Jun 16th or 17th. See the official SUMO Challenge Contest Rules.

$2500 cash prize

Titan X GPU

Oral Presentation

$2000 cash prize

Titan X GPU

Oral Presentation

$1500 cash prize

Titan X GPU

Oral Presentation

The 2019 SUMO Challenge is generously sponsored by Facebook AI Research.